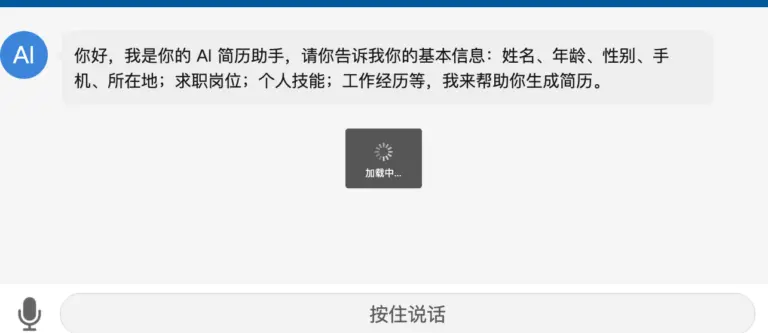

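Using Volcano Engine (火山引擎) streaming speech recognition for speech-to-text in H5 / uniapp. The backend is written in Go. I record audio in the Vue frontend and pass the audio data to the Go backend, which then uploads it directly and returns the recognized text.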
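The client side of that flow (H5 only, where `fetch` and `FormData` are available) can be sketched as follows. This is a minimal, hypothetical sketch, not the author's `ars_api` code, which is not shown here. The endpoint URL, the form field name `file`, and the JSON body shape `{ "result": [{ "text": "..." }] }` are all assumptions, chosen to match how the component later reads `result.data.result[0].text`.

```typescript
// Hypothetical sketch: the "file" field name, the endpoint, and the
// response shape are assumptions, not taken from the original article.

// Assumed response shape, mirroring result.data.result[0].text.
export interface AsrResponse {
  data?: { result?: Array<{ text?: string }> };
}

// Returns the first recognized utterance, or "" when the backend
// returned no result (e.g. silence), instead of throwing.
export function extractText(resp: AsrResponse): string {
  return resp.data?.result?.[0]?.text ?? "";
}

// Packs the recorded MP3 Blob into multipart form data for the Go backend.
export function buildAudioForm(blob: Blob, filename = "record.mp3"): FormData {
  const form = new FormData();
  form.append("file", blob, filename);
  return form;
}

// Uploads the audio and resolves with the recognized text.
export async function recognize(endpoint: string, blob: Blob): Promise<string> {
  const res = await fetch(endpoint, { method: "POST", body: buildAudioForm(blob) });
  if (!res.ok) {
    throw new Error(`upload failed: HTTP ${res.status}`);
  }
  const body = await res.json();
  // Wrap the JSON body the way an axios/uni.request-style response would.
  return extractText({ data: body });
}
```

Returning an empty string for an empty `result` array keeps the UI from crashing when the user releases the button without saying anything.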

Frontend implementation: the frontend uses the recorder-core plugin to record MP3 files.
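recorder-core's `open` and `stop` report their results through success/failure callbacks, as the component in this post does. If you prefer `async`/`await` in the event handlers, a thin Promise wrapper is one option. The sketch is my own assumption, not part of recorder-core: `CallbackRecorder` types only the two methods used in this article, with the same callback signatures the component uses.

```typescript
// Structural type covering only the two callback-style recorder-core
// methods used in this article; not recorder-core's full type definition.
export interface CallbackRecorder {
  open(success: () => void, fail: (msg: string, isUserNotAllow: boolean) => void): void;
  stop(success: (blob: Blob, duration: number) => void, fail: (msg: string) => void): void;
}

// Resolves once microphone permission is granted and the recorder is open.
export function openAsync(rec: CallbackRecorder): Promise<void> {
  return new Promise((resolve, reject) => {
    rec.open(
      () => resolve(),
      (msg, isUserNotAllow) =>
        reject(new Error((isUserNotAllow ? "UserNotAllow, " : "") + msg)),
    );
  });
}

// Resolves with the encoded audio Blob and its duration
// (milliseconds, per recorder-core's documentation).
export function stopAsync(rec: CallbackRecorder): Promise<{ blob: Blob; duration: number }> {
  return new Promise((resolve, reject) => {
    rec.stop(
      (blob, duration) => resolve({ blob, duration }),
      (msg) => reject(new Error(msg)),
    );
  });
}
```

For example, `endVoiceRecord` could then do `const { blob } = await stopAsync(rec)` followed by `await getPartnerList(blob)` inside a single `try`/`catch`.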

Install it with:

```shell
npm install recorder-core
```

The complete component code is as follows:

```vue
<template>
  <view class="ar-footer">
    <slot>
      <view class="ar-footer-button">
        <image class="ar-footer-img" :src="keyboardPng" v-if="mode === 1" @click="setMode(2)" />
        <image class="ar-footer-img" :src="voicePng" v-else @click="setMode(1)" />
      </view>
      <view class="ar-footer-wrapper">
        <view class="ar-footer-text" v-if="mode === 1">
          <input type="text" class="ar-footer-input" v-model="text" placeholder="Type a message..." @keydown="handleKeydown" />
          <view class="ar-footer-send" @click="send">Send</view>
        </view>
        <!-- .prevent on the touch events stops the browser from also firing emulated mouse events, which would start/stop recording a second time -->
        <button class="ar-footer-voice" v-else
          @touchstart.prevent="startVoiceRecord" @touchend.prevent="endVoiceRecord"
          @mousedown="startVoiceRecord" @mouseup="endVoiceRecord">Hold to talk</button>
      </view>
    </slot>
  </view>
</template>

<script setup lang="ts">
import { ref } from 'vue'
import keyboardPng from '../../../static/ai-images/keyboard.png'
import voicePng from '../../../static/ai-images/voice.png'
import { getPartnerList } from '@/api/ars_api'
import Recorder from 'recorder-core'
// MP3 encoder: both files are needed for type: "mp3"
import 'recorder-core/src/engine/mp3'
import 'recorder-core/src/engine/mp3-engine'
// Optional waveform visualization extension
import 'recorder-core/src/extensions/waveview'

const props = defineProps({
  onSend: { type: Function, required: true }
})

// 1 = text input mode, 2 = voice (hold-to-talk) mode
const mode = ref(1)
const text = ref('')

let rec: any = null  // recorder-core instance, created when voice mode is entered
let wave: any = null // optional WaveView instance

// Send the typed text when Enter is pressed
const handleKeydown = (event: KeyboardEvent) => {
  if (event.key === 'Enter') {
    send()
  }
}

const setMode = (val: number) => {
  if (val === 2) {
    recOpen()
  } else if (rec) {
    // Leaving voice mode: release the microphone
    rec.close()
    rec = null
  }
  mode.value = val
}

const send = () => {
  props.onSend(text.value)
  text.value = ''
}

// Hold to talk: start recording on press
const startVoiceRecord = () => {
  if (!rec) {
    console.error('Recorder is not open')
    return
  }
  rec.start()
  console.log('Recording started')
}

// On release: stop recording, upload the MP3 Blob, send the recognized text
const endVoiceRecord = () => {
  if (!rec) {
    console.error('Recorder is not open')
    return
  }
  rec.stop(async (blob: Blob, duration: number) => {
    try {
      const result = await getPartnerList(blob)
      // Guard against an empty result (e.g. nothing was said)
      const recognized = result?.data?.result?.[0]?.text
      if (recognized) props.onSend(recognized)
      text.value = ''
    } catch (err) {
      console.error('Speech recognition request failed:', err)
    }
  }, (err: any) => {
    console.error('Failed to stop recording: ' + err)
    rec.close()
    rec = null
  })
}

const recOpen = () => {
  try {
    rec = Recorder({
      type: 'mp3',
      sampleRate: 16000,
      bitRate: 16,
      onProcess: (buffers: any, powerLevel: any, bufferDuration: any, bufferSampleRate: any) => {
        // Waveforms can be drawn, or data streamed to the server, here in real time
        if (wave) wave.input(buffers[buffers.length - 1], powerLevel, bufferSampleRate)
      }
    })
    // Open the recorder; this triggers the microphone permission prompt
    rec.open(() => {
      console.log('Recorder opened')
      // To show a waveform, create the visualizer here from a DOM element, e.g.
      // wave = Recorder.WaveView({ elem: someElement })
    }, (msg: string, isUserNotAllow: boolean) => {
      console.log((isUserNotAllow ? 'UserNotAllow, ' : '') + 'Cannot record: ' + msg)
      rec = null
    })
  } catch (error) {
    console.error('Unable to access the microphone:', error)
  }
}
</script>
```

The `ars_api` upload interface:

...